OmniCal Overview

OmniCal is an advanced camera-based system for projection mapping projects. It includes tools to plan projection setups, calibrate and align projectors to the Designer coordinate system, align projection surfaces, adjust mesh inconsistencies and deform meshes based on captured point clouds.

One of the big frustrations of our powerful 3D workflow has always been achieving accurate 3D models and calibrating them. Solutions so far have relied on laser scanning or on having a skilled CAD person on site who can modify the CAD model when inconsistencies are found between the 3D mesh and reality.

OmniCal removes the requirement to have accurate 3D models using its powerful calibration and Mesh Deform tools.

View the full Product information in this Technical Specifications document.

Features

- Robust and reliable cameras using Ethernet-based connections.

- OmniCal captures images of structured light patterns to construct a 3D representation of projection surfaces as a point cloud.

- It uses the point cloud to calibrate the relationship between projection surfaces, projectors and cameras.

- The QuickAlign tool helps users to manually align projection surfaces in the Designer project to match their real world positions and proportions. This is an “offline” process which doesn’t require access to the physical stage. Multiple scene objects can be aligned separately if their relative pose is not known in advance.

- The single-click Mesh Deform tool deforms a 3D model to match the real world (using the point cloud data).

- RigCheck provides easy re-calibration tools. If only projectors have moved since the previous calibration, this is a single click. If cameras or projection surfaces have moved, an operator will need to adjust the previous alignment using newly captured camera images.

- The OmniCal simulation workflow lets you plan with simulated cameras and lenses, view their coverage and perform test calibrations to ensure that the system and camera setup will perform as required on site.

- Supports 360° projection environments.

- Designed for calibration of surfaces and scenes with 3D depth.

One camera option is available:

- OmniCal MV system

  - Ideal for fixed installs.

  - Reliable, continuous Ethernet camera connection.

  - Choice of lenses for four different fields-of-view.

  - Cameras only need setting up once.

Current limitations

- The quality of the calibration depends on suitable lighting conditions.

- Requires constant light levels during capture process.

- Works best with low ambient light levels.

- As mentioned above, a simulation must be run first to check project suitability. For large projects we recommend doing separate calibrations with groups of projectors and manually blending overlaps between the groups.

- Requires non-reflective, opaque projection surfaces (no gauzes or mirrors).

- Needs a few clearly defined feature points on the 3D mesh and real object, which can be visually identified on the camera images. For example, projection surfaces with sharp corners work well, but smooth surfaces with no features do NOT.

- OmniCal requires depth from the projector’s point of view. Scene depth is particularly important when using moving elements such as Automation.

Workflow

For a trained OmniCal operator

Create a simulated Camera Plan

- Use the Disguise simulator to check project suitability.

- You can place virtual cameras and simulate a capture and calibration. You will need to have a project file with projectors and projection surfaces in the same configurations and positions as they will be on-site.

- The basic rule for camera placement is that at least 2 cameras need to see every point on your projection surface(s). Cameras should also have a large angular separation, i.e. the directions they face should not be parallel.

- The simulation will help you determine how many cameras are needed, their positions and lenses and the calibration parameters. It will also show you the ideal calibration results you should expect on-site. Note that these ideal results are without real-world influences like unsuitable lighting conditions, reflections, occlusions, movement in the scene during capture etc.
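The two placement rules above can be sanity-checked with a few lines of geometry before opening the simulator. The following is only a rough sketch and not part of OmniCal: the camera dictionaries, the function names, the cone-shaped view volume (a real camera has a rectangular frustum) and the 20 degree minimum separation are all assumptions made for illustration, and occlusion is ignored.

```python
import math
from itertools import combinations

def _unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def _angle_deg(a, b):
    """Angle in degrees between two 3D vectors."""
    a, b = _unit(a), _unit(b)
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(dot))

def sees(camera, point):
    """True if the point lies inside the camera's viewing cone (occlusion ignored)."""
    to_point = tuple(p - c for p, c in zip(point, camera["position"]))
    return _angle_deg(camera["direction"], to_point) <= camera["fov_deg"] / 2

def check_plan(cameras, surface_points, min_separation_deg=20.0):
    """Return (points seen by fewer than 2 cameras, near-parallel camera pairs).

    min_separation_deg is an arbitrary illustrative threshold, not an OmniCal value.
    """
    under_covered = [p for p in surface_points
                     if sum(sees(c, p) for c in cameras) < 2]
    near_parallel = [(a["name"], b["name"])
                     for a, b in combinations(cameras, 2)
                     if _angle_deg(a["direction"], b["direction"]) < min_separation_deg]
    return under_covered, near_parallel

# Hypothetical two-camera plan looking inwards at a stage.
cameras = [
    {"name": "cam1", "position": (-3, 0, 0), "direction": (1, 0, 1), "fov_deg": 70.6},
    {"name": "cam2", "position": (3, 0, 0), "direction": (-1, 0, 1), "fov_deg": 70.6},
]
points = [(0, 0, 3), (-6, 0, 0)]
under_covered, near_parallel = check_plan(cameras, points)
print("Seen by fewer than 2 cameras:", under_covered)
print("Near-parallel camera pairs:", near_parallel)
```

A check like this only flags obvious problems. The simulated capture and calibration in Designer remain the reference for whether a plan is suitable.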

Setup Cameras On-site

- You will need to make sure the position, orientation and field-of-view of your real cameras matches your simulated Camera Plan. To help you with this, there is a camera setup editor that shows what the cameras are looking at.

- When mounting the OmniCal MV system, you need to manually adjust the physical focus and aperture (iris) on the lens, so that the images of the scene are sharp and well exposed. Exposure time can be controlled from within Disguise.

- From the Camera Setup tool, check how well Blob Detection is working. Blobs are the dots that are projected in the structured light patterns.

- You may need to adjust camera parameters (like exposure time) according to the light level to get the best results.

Capture

- Capture is an automatic ‘one-button’ process that typically takes less than a minute. The exact duration depends on the number of projectors and cameras and on the resolution of the structured light pattern (number of blobs).

- Once this is complete, the physical stage is free. The next steps can be done “offline”.

Calibration

- You can view the point cloud after this stage and check the calibration errors in pixels for each projector.

- You may need to adjust calibration parameters to get the best results, but usually these will be chosen automatically.
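For readers unfamiliar with calibration errors expressed in pixels, the sketch below shows the generic idea of a reprojection error: project known 3D points through a device model and measure how far they land from where they were observed. This is a textbook pinhole model under simplifying assumptions (points already in the device's own frame, no lens distortion); it is not a description of how OmniCal computes its scores, and all names and numbers are hypothetical.

```python
import math

def project(point, focal_px, principal_point):
    """Pinhole projection of a 3D point (device frame, z forward) to pixel coordinates."""
    x, y, z = point
    cx, cy = principal_point
    return (focal_px * x / z + cx, focal_px * y / z + cy)

def rms_reprojection_error(points_3d, observed_px, focal_px, principal_point):
    """Root-mean-square pixel distance between projected and observed positions."""
    squared = []
    for point, (u_obs, v_obs) in zip(points_3d, observed_px):
        u, v = project(point, focal_px, principal_point)
        squared.append((u - u_obs) ** 2 + (v - v_obs) ** 2)
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical data: two points, one observed 0.5 px away from its prediction.
points_3d = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0)]
observed_px = [(960.3, 540.4), (1460.0, 540.0)]
error_px = rms_reprojection_error(points_3d, observed_px,
                                  focal_px=1000.0, principal_point=(960.0, 540.0))
print(f"RMS reprojection error: {error_px:.2f} px")
```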

Alignment

- This is a manual step which aligns the point cloud with the projection surfaces in Disguise.

- Users add alignment points to camera images to line up wireframe views of the projection surfaces with reality.

- This only needs to be done once as long as cameras or projection surfaces do not move.

- Re-shape points can also be added to correct the shape of the mesh. This can be thought of as a 3D warp from the camera’s point of view.

Mesh Deform

This is a final key step which deforms a mesh in Designer to match the real world by using the depth information from the point cloud.

For an untrained operator (recalibration)

Select Camera Plan

A user would select a Camera Plan previously created by a trained operator which contains known good settings for Capture, Calibration and Alignment.

Rig Check

This tool allows a user to compare live camera images to those from a previous Capture to check whether cameras or projection surfaces have moved. If so, the user can adjust the alignment reference points by dragging them into the correct positions.

Execute

- A button which triggers a new Capture and Calibration using the settings from the Camera Plan.

- No user interaction is required after this point. Projectors will automatically be calibrated at the end of this process.

Hardware

OmniCal MV system

The OmniCal MV system comes in kits of up to 4 or 8 cameras (depending on kit size) and is well suited to fixed installs. The cameras are powered via Power over Ethernet (PoE), so each one requires only a single Ethernet connection.

Lenses including 6mm, 8mm and 12mm are incorporated into the kit depending on your project needs, with a total of 24 lenses available, allowing on-the-fly customisation of the setup.

Small Kit

Upper Foam

Up to 4 Disguise MV Cameras

Lower Foam

Up to 12 Lenses

Options Include:

- Fujinon 6mm Lens

- Fujinon 8mm Lens

- Fujinon 12mm Lens

Large Kit

Upper Foam

Up to 8 Disguise MV Cameras

Lower Foam

Up to 24 Lenses

Options Include:

- Fujinon 6mm Lens

- Fujinon 8mm Lens

- Fujinon 12mm Lens

Not included

The kits do not contain network equipment like cables, switches or PoE injectors. For details on the required cables and switches please see Network Setup on the next page.

Legacy Wireless kit with iOS app

The wireless OmniCal kit based on an iOS app has not been supported since r19.1; see the Product Compatibility table (at the bottom). Note that Apple will also remove the OmniCal iOS app from the AppStore at the end of 2025.

Field of View options

The Disguise OmniCal kits offer camera and lens options to meet various needs in terms of camera coverage and Field of View (FOV) angles.

The widest Field of View is with a Disguise machine vision camera on a 6mm lens. The narrowest (telephoto) option is a 25mm lens.

Please note that the Field of View / opening angle depends on the focal length of the lens and also on the physical size of the image sensor. For example, the camera inside a mobile phone typically has a smaller image sensor than the Disguise MV cameras, whereas professional film or photography cameras typically have a larger sensor. For a given focal length, a smaller sensor results in a smaller FOV than a larger sensor.

The focal length mentioned in a lens data sheet is usually the actual value, not the 35mm-film-equivalent. Therefore focal length values can only be directly compared for cameras with the same sensor size.

In our Designer software, all information regarding focal length, 35mm-equivalents and FOV is either editable or automatically calculated when editing a Camera in an OmniCal plan.

An overview of OmniCal camera and lens combinations and the resulting field of view:

Disguise G-507 machine vision cameras have a 3.908 crop factor (compared to 35mm film) when used with approved C-mount lenses.

| Focal length | Focal length 35mm equivalent | Horizontal Field of View angle |
|---|---|---|
| 6 mm | 23.4 mm | ~70.6 degrees |
| 8 mm | 31.3 mm | ~56 degrees |
| 12 mm | 46.9 mm | ~39 degrees |
| 25 mm | 97.7 mm | ~19.3 degrees |
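The relationship between focal length, crop factor and field of view in the table can be reproduced with the standard pinhole formula, FOV = 2 · atan(sensor width / (2 · focal length)). The sketch below is illustrative only: the crop factor comes from the text above, but the sensor width of about 8.5 mm is an assumption inferred from the table values rather than taken from a camera data sheet, and the function names are hypothetical.

```python
import math

CROP_FACTOR = 3.908      # stated above for the G-507 camera
SENSOR_WIDTH_MM = 8.5    # ASSUMPTION: inferred from the table, not from a data sheet

def equivalent_focal_length(focal_mm, crop_factor=CROP_FACTOR):
    """35mm-equivalent focal length for a given actual focal length."""
    return focal_mm * crop_factor

def horizontal_fov_deg(focal_mm, sensor_width_mm=SENSOR_WIDTH_MM):
    """Horizontal field of view of an ideal (distortion-free) lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))

for focal in (6, 8, 12, 25):
    print(f"{focal} mm: {equivalent_focal_length(focal):.1f} mm equivalent, "
          f"~{horizontal_fov_deg(focal):.1f} degrees horizontal FOV")
```

With these assumptions the results agree with the table to within rounding. In practice Designer calculates these values for you when editing a Camera in an OmniCal plan.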

Tips and Tricks

- The fastest way to tell whether you have a good calibration is to look at the reprojection scores for each projector and camera in the calibration report (found at the very bottom). Any score below 1 pixel is considered good, similar to the error margin that would be accepted when using QuickCal.

- Anything above 1 pixel usually indicates that something went wrong. In simulations you will normally see errors of around 0.5 pixels or below.

- Avoid reflective surfaces, as they can cause issues with calibration.

- Use surfaces with a lot of depth features, as they make the calibration more accurate. It is especially important to have depth from each projector’s “point of view”. For example, if all the visible blobs from a projector land on a flat surface, it will not be calibrated correctly. One way to fix this is to place an object in your environment temporarily during a capture to provide depth information.

- Each blob needs to be seen by at least 2 cameras to be used in a calibration.

- Ensure blobs from across a projector’s output can be seen. For example, if only blobs from the top left of a projector are detected, it won’t be calibrated correctly.

- Ensure a large difference in angles of attack between cameras.

- Capture Setup is important for good blob detection. You will most likely have to change the blob size, grid density and camera exposure to suit your environment.

- Blobs should be as small as possible while still being detected by cameras. This improves the calibration accuracy. Also, if they are too large they won’t be detected at all.

- Elongated blobs can cause higher calibration errors. Try reducing the blob size to handle this. Avoid large angles between the projector and the projection surface normal, e.g. 45 degrees.

- More blobs doesn’t mean a better calibration. Usually the default grid size of 32 is sufficient. Use more blobs if you require a detailed point cloud for Mesh Deform.

- Avoid lighting gradients. If the light level changes across an image, blob detection may not work as well.

- If you are calibrating a perfectly flat surface and getting strange results, toggle the epipolar/homography camera calibration algorithm under the Plan’s Calibration Setup window and see if you get a better result.

- If most blobs are landing on a flat surface, this can skew the calibration results in favour of those areas. Enabling planar point removal in the Plan’s Calibration Setup window may improve your results.

- It can be difficult to line up geometrically symmetrical shapes (cubes / pyramids / bowl shapes). You could embed features or identification letters and numbers into the OBJ. You can also name reference points in the QuickAlign window.

- Surfaces without corners or visible reference points, such as domes and cylinders, are difficult to line up.

- If you need to use Mesh Deform, use the point cloud visualisation mode to preview the results.

- Point cloud visualisation affects performance. Once you have verified the validity of your calibration, turn it off to ensure good performance.
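To see why the tip about elongated blobs singles out large projection angles, it helps to estimate how much a round blob stretches on a tilted surface. As a first-order approximation that ignores perspective, the stretch factor is 1 / cos(θ), where θ is the angle between the projection direction and the surface normal. The sketch below is illustrative only; the function names and vectors are hypothetical and nothing here reflects OmniCal's internal blob handling.

```python
import math

def incidence_angle_deg(projector_dir, surface_normal):
    """Angle between the projection direction and the surface normal (0 = head-on)."""
    dot = sum(a * b for a, b in zip(projector_dir, surface_normal))
    norm = (math.sqrt(sum(a * a for a in projector_dir))
            * math.sqrt(sum(b * b for b in surface_normal)))
    # abs() so the result does not depend on which way the normal points
    return math.degrees(math.acos(min(1.0, abs(dot) / norm)))

def blob_elongation(angle_deg):
    """Approximate stretch factor of a round blob along the tilt direction."""
    return 1.0 / math.cos(math.radians(angle_deg))

# Hypothetical projector aimed along +z at a surface tilted by 45 degrees.
angle = incidence_angle_deg((0, 0, 1), (0, 1, -1))
print(f"Incidence angle: {angle:.1f} degrees, "
      f"blob stretched about {blob_elongation(angle):.2f}x")
```

At 45 degrees a blob is already stretched by roughly 40 percent, and the factor grows quickly beyond that.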

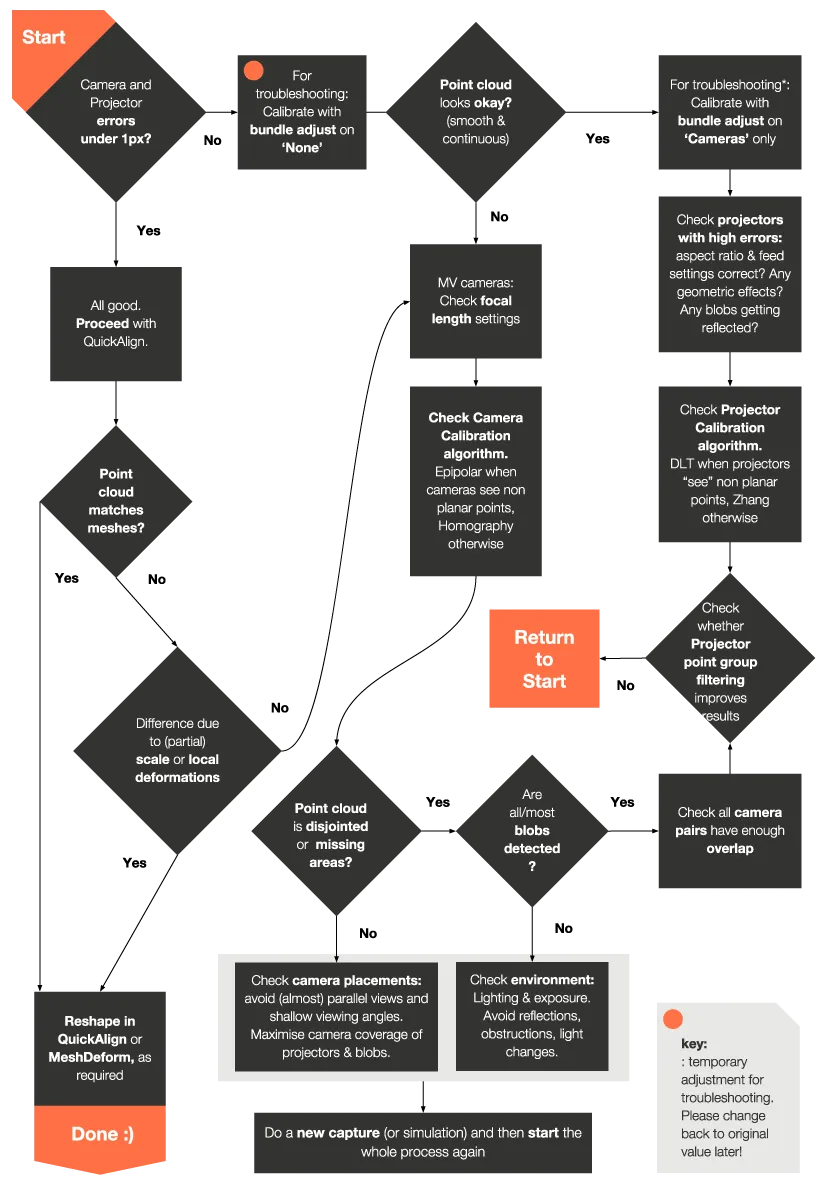

Troubleshooting

OmniCal Troubleshooting guide