Notch Layer

The Notch layer allows users to use Notch Blocks exported from Notch Builder.

Notch content creation should be approached in a similar way to rendered content: the user specifies as much as possible in advance and tests the content on a real-world system before show time to reduce the likelihood of performance-related issues.

Some Generative layers take their source from other layer types (either content or generative) by use of an arrow. Linking two layers with an arrow defines the layer the arrow starts from as the source, and the layer it points to as the destination. If there is an arrow between a content layer and an effect layer, the content layer is said to be ‘piped in’ to the effect layer. For more information on arrows, see the compositing layer topic.

To draw an arrow between two layers, hold down Alt, then left-click and drag from the source layer to the destination layer.

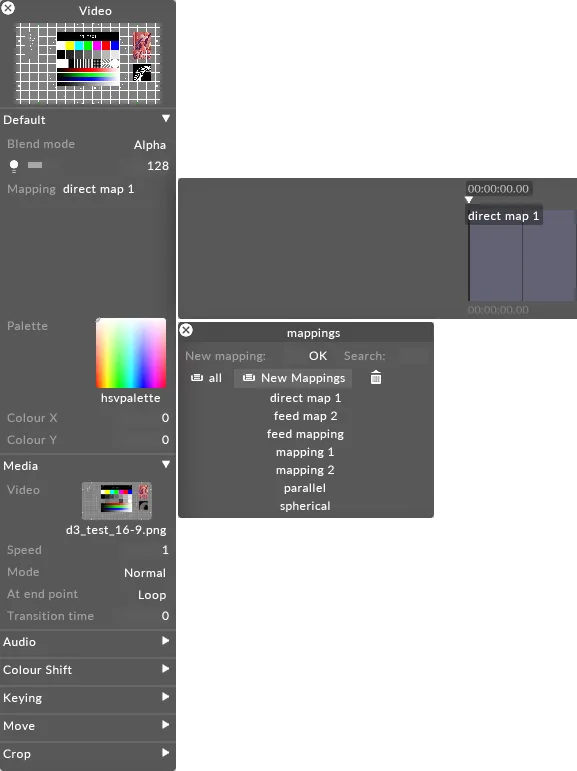

On the Notch layer, you can specify which source the layer is using (either texture or an arrowed layer) by using the Video Loader parameter. The Video parameter of the layer will show a thumbnail of either the texture chosen, or the content coming from the arrowed layer (depending on the selection made).

Notch v1.0+

In 2024, Notch released the Notch v1.0 private beta. Designer officially supports Notch v1.0+ beta blocks from Designer r27.7, released on 12/06/2024.

We support Notch’s new colour management pipeline. You can find more information about Notch colour management at the bottom of this page.

The following limitations affect Notch v1.0 beta blocks:

- Windows OS must be Windows 10 1607 or higher.

- Notch v1.0 only supports Nvidia GPUs with Maxwell architecture or newer.

- Notch v1.1 only supports Nvidia GPUs with Turing architecture or newer.

Please see the compatibility table to work out if your machine supports Notch v1.0+ blocks:

Notch advice

-

Notch renders at the resolution of the mapping used on the Notch layer within Designer.

-

Rotation is displayed in degrees within Notch, but shown as radians once exposed within Designer.

-

The Y and Z axes are different in Notch and Designer, and need to be flipped/converted manually using an expression.

-

The general consensus is that you should NOT use Universal Crossfade alongside Notch. Universal Crossfade doubles the load, and depending on the effect used, it is unlikely to produce the desired result.

-

Only ever have one DFX file connected to a Kinect.

-

When using a Notch block with Kinect input, the machine needs the Kinect SDK installed for the input to be visible. You will also need to enable Kinect input via Devices in Notch Builder.

-

For audio-reactive content, it’s worthwhile to set your Notch project’s audio device (device > audio device) to the audio device being used on the server.

-

When using Sockpuppet, please advise the Notch content creators to adopt a unified naming convention for all exposed parameters (e.g. FX1, FX2, Speed1, Speed2, Color1, Color2, Color3); otherwise the parameters will be difficult to manage at the control end.

-

Any block that stores frames (e.g. frame delay) needs to be managed extremely carefully, or it may consume all available memory. If VRAM is being used up inexplicably, check whether the Notch block is storing frames anywhere.

-

Also, because the DMX assignment order of Notch blocks is not user-definable, it is always best to predetermine the number of attributes you want exposed in the Master block.
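The rotation and axis tips above come down to small conversions that an expression has to perform. The Python sketch below only illustrates the arithmetic; it is not Designer's expression syntax, the function names are hypothetical, and the Y/Z mapping (including whether the swapped axis also needs negating) is an assumption you should verify against how the block was built.

```python
import math


def notch_degrees_to_designer_radians(degrees: float) -> float:
    """Convert a rotation authored in degrees (as shown in Notch) to radians."""
    return math.radians(degrees)


def designer_radians_to_notch_degrees(radians: float) -> float:
    """Convert a rotation exposed in radians (as shown in Designer) back to degrees."""
    return math.degrees(radians)


def swap_y_z(x: float, y: float, z: float, negate_z: bool = False) -> tuple[float, float, float]:
    """Hypothetical axis conversion: swap Y and Z, optionally negating the new Z.

    Whether a sign flip is needed depends on how the block was built; verify on site.
    """
    new_z = -y if negate_z else y
    return (x, z, new_z)


print(notch_degrees_to_designer_radians(90.0))
print(swap_y_z(1.0, 2.0, 3.0))  # (1.0, 3.0, 2.0)
```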

Workflows

The layer is specifically for playing back Notch blocks. Depending on your application and production needs, there are several workflows you can use to integrate Notch effects into Designer. Below are a few recommended workflows for their respective applications. Bear in mind that these are stripped to the bare minimum for simplicity, and you need a valid copy of Notch Builder with export capabilities to follow along.

For more information on Notch Builder, see here.

IMAG effects

These are probably the most straightforward effects to implement on a show, as blocks are usually designed to be plug and play, with a video source and exposed parameters to control and interact with the effect. The workflow is outlined below.

In Notch

-

Create a Notch effect with a node that accepts a video (usually Video Loader).

-

Expose the Video property in the Video Loader and compile the block.

In Disguise

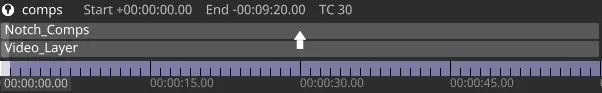

- Create a Notch layer and load the IMAG Notch block into it.

- Create a Video layer (or any layer you wish to output content from, e.g. a generative layer).

- Set Video Source to Layer.

- Move the Notch layer above the other layer in the stack, then arrow the video layer to it.

You should now be able to see the IMAG effect applied to any content from the layer below. You can change the effects being applied under the parameter group’s Notch Layer parameter if the effect is set up to use layers as individual effects.

-

Designer does not yet support Video selection without layer arrowing. The Video parameter under Video Sources is mostly unused, though it can still be used for placeholder images. The images displayed under Video Sources are taken from the DxTexture folder.

-

If multiple Notch layers are used and you wish the arrowed video to be the same for all of them, you will need to set up the exposed parameter’s Unique Identifier in Notch to be the same for all exposed video sources.

-

If multiple layers are arrowed into an effect that accepts multiple sources, the sources are assigned in order of selection (i.e. the first layer selected becomes the first Video source, the second becomes the second, and so on), regardless of the order of the parameters within the list.

3D virtual lighting simulations

These workflows often involve a 3D-mapped object with projectors simulating light sources moving and affecting the object in real time. These effects rely on the virtual 3D space matching the real space and object, and on the coordinate systems of Designer and Notch lining up.

In Notch

- Add a 3D object node (or a Shape 3D node, etc.) to the scene.

- Add a light source.

- Create a UV camera node to output the lit textures onto the object’s UVs.

- Expose the appropriate parameters (light positions, object positions, etc).

- Compile and export the block.

In Disguise

- Create a surface with the same object used in Notch.

- Calibrate the projectors to the surface with your preferred calibration method.

- Create a Notch layer.

- Apply the Notch layer via Direct mapping to the object.

- Move the lights around to see the object UVs being affected.

-

For the Notch scene to match the scene within Designer, accurate measurements need to be taken on stage and an origin reference point needs to be determined from the start. Setting an origin point early makes the line-up easier.

-

Lights can be linked to MIDI, OSC, or DMX controls like every parameter in Designer, or can be keyframed and sequenced on the timeline.

-

The mapped object’s movement can be linked to automation or tracking systems, and the positional data can then be used to drive the exposed position and rotation parameters.

-

If multiple objects are in a scene, you will need to create a larger UV layout that accommodates each object in a separate UV area, and then match the overall lightmap resolution by setting the surface resolution within Designer. You can use the UV Output section of the 3D objects node in Notch to determine where in UV space a specific mesh will be output within the overall canvas.
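As a rough planning aid for the multi-object case above, the following Python sketch packs objects into a square grid of equally sized UV tiles and reports the canvas size to use as the surface resolution, plus each object's UV offset and scale. The equal-tile grid and all names are hypothetical; real layouts are often uneven, UV origin conventions vary between tools, and the values still have to be entered manually in the UV Output section of each 3D object node.

```python
import math
from dataclasses import dataclass


@dataclass
class UVTile:
    """Where one object's lightmap sits inside the shared UV canvas (0-1 UV units)."""
    name: str
    offset_u: float
    offset_v: float
    scale: float


def grid_uv_layout(object_names: list[str], tile_px: int) -> tuple[int, list[UVTile]]:
    """Pack objects into a square grid of equally sized UV tiles.

    Returns the side length in pixels of the square canvas (a candidate
    surface resolution) and the UV offset/scale for each object.
    """
    if not object_names:
        raise ValueError("need at least one object")
    cols = math.ceil(math.sqrt(len(object_names)))
    scale = 1.0 / cols
    tiles = []
    for i, name in enumerate(object_names):
        row, col = divmod(i, cols)
        tiles.append(UVTile(name, col * scale, row * scale, scale))
    return cols * tile_px, tiles


canvas_px, tiles = grid_uv_layout(["set_piece", "stage_left", "stage_right"], 1024)
print(canvas_px)  # 2048: a 2x2 grid of 1024px tiles
```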

Particle systems and tracking regions

A very common application of Notch is to use it alongside tracking systems such as BlackTrax to generate particles from specific points in space, to be either projected on a surface or displayed on an LED screen. Below is a broad outline of the workflow using an LED screen, the BlackTrax system, and a Region Camera to specify the tracking regions:

In Notch

- Create a particle system (Minimum required: Particle Root, Emitter, Renderer).

- Create a Region Camera.

- Expose the position parameters of the Particle Emitter, as well as the Region Camera’s Top Left X and Y, and Bottom Right X and Y.

- Compile the block, and export to Disguise.

In Disguise

- Create an LED screen. This can be placed anywhere in the virtual stage, though it is recommended to place the LED in the correct position to match the physical space.

- Ensure the BlackTrax system is connected and tracking data is being received from the beacons, then select a stringer to use as a tracked point for the Notch particles.

- Create a Notch layer and load the exported block into it.

- Set the Play Mode to FreeRun, or press play on the timeline, for the particles to begin spawning. Because they are a simulation, particles only spawn over a span of time.

- Right-click on the BT point being tracked to open a widget that displays the point’s current coordinates in 3D space.

- With the Notch layer open and the Particle Emitter position parameters visible, navigate to the tracked point’s widget, hover over one of the position values, then Alt + left-click and drag an arrow from there to the corresponding position parameter in the Notch block.

- You should now see the particle effect either disappearing off-screen (if the beacon is not in range of the LED screen) or moving towards it.

- If the world coordinates of Notch and Disguise do not match, and the particle effect is limited to a particular screen or mapped area, a Region Camera can be used to mark the boundaries of the tracking region instead.

- To set the region camera, simply measure the XY position of the top-left corner of the LED screen, and do the same for the bottom-right, and enter these values in the exposed Region Camera parameters.
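The corner values for the step above can be derived from the screen's measured centre and size. This Python sketch assumes an axis-aligned screen with +X to the right and +Y up; the helper and field names are hypothetical, and the horizontal-screen branch applies the X/Z rule of thumb given in the notes below, assuming the "top" edge of a floor screen is its -Z edge.

```python
from dataclasses import dataclass


@dataclass
class Screen:
    centre: tuple[float, float, float]  # measured (x, y, z) of the screen centre
    width: float
    height: float                       # for a floor screen: its depth along Z
    horizontal: bool = False            # True for screens laid on the ground


def region_camera_values(screen: Screen) -> dict[str, float]:
    """Hypothetical helper returning Top Left / Bottom Right values for the
    exposed Region Camera parameters.

    Assumptions: the screen is axis-aligned, +X points right, +Y points up for
    vertical screens, and the top edge of a floor screen is its -Z edge.
    For floor screens the Z coordinate is entered in the *_y parameters.
    """
    cx, cy, cz = screen.centre
    half_w, half_h = screen.width / 2, screen.height / 2
    second = cz if screen.horizontal else cy
    top = second - half_h if screen.horizontal else second + half_h
    bottom = second + half_h if screen.horizontal else second - half_h
    return {
        "top_left_x": cx - half_w,
        "top_left_y": top,
        "bottom_right_x": cx + half_w,
        "bottom_right_y": bottom,
    }


# A 4m x 2m vertical LED wall whose centre is 2m above the origin.
print(region_camera_values(Screen(centre=(0.0, 2.0, -3.0), width=4.0, height=2.0)))
# {'top_left_x': -2.0, 'top_left_y': 3.0, 'bottom_right_x': 2.0, 'bottom_right_y': 1.0}
```

If the values do not line up on site, the tracked-point method described in the notes is usually the faster check.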

-

A quicker method of finding the Region Camera values is often to place a tracked BT point at the top-left corner of the screen, then at the bottom-right corner, and manually enter the XYZ coordinates displayed on the tracked point’s widget; because these come from the tracking system itself, they are sure to match precisely.

-

A common mistake that leads to oddities with tracking regions is using the wrong axes. As a rule of thumb, for vertical surfaces you need the X and Y positions of the top-left and bottom-right corners, whereas for horizontal surfaces (e.g. effects built to be displayed on the ground) you need X and Z. This also depends on how the Notch block itself was built and the orientation chosen in the Region Camera node, so it’s important to double-check these details beforehand.

-

When using the region camera, particle size plays a fairly important role. It may be advisable to expose emitter size, particle size, and camera distance to achieve the desired result.

-

If multiple machines are outputting the same set of particles but showing different results, it is because each machine is running its own instance of the simulation. You can fix this by setting the Particle Root node for that emitter to Deterministic, by ticking the box in the node editor in Notch Builder.
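To illustrate why Deterministic mode fixes the mismatch described above, the toy Python emitter below shows that two independent instances only agree when they share a seed. This is a conceptual sketch using Python's `random` module, not how Notch implements deterministic particles; `spawn_offsets` is a hypothetical name.

```python
import random
from typing import Optional


def spawn_offsets(count: int, seed: Optional[int] = None) -> list[tuple[float, float]]:
    """Toy particle emitter returning random (x, y) spawn offsets.

    With seed=None, random.Random seeds itself from OS randomness (or the
    system time), so two instances (two machines) produce different particles.
    A fixed seed makes every instance generate the same sequence.
    """
    rng = random.Random(seed)
    return [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)) for _ in range(count)]


machine_a = spawn_offsets(5, seed=1234)
machine_b = spawn_offsets(5, seed=1234)
print(machine_a == machine_b)  # True, as with a deterministic Particle Root
```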

Notch Layer Properties

Notch layers consist of a set of default properties (detailed here) and additional properties that appear depending on what is actually in the Notch block. For an explanation of properties beyond the defaults, please refer to your Notch content creator.

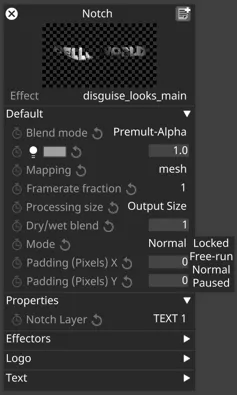

Effect

The Effect parameter defines which Notch DFX File the layer is looking at.

Blend Mode

Blend Mode controls how the output of the layer is composited with the layers below.

Brightness

This property (which appears as a light bulb icon) controls the brightness of the layer output.

Mapping

The mapping property controls how the layer output is mapped onto the screen(s) in the Stage level.

For information on mapping, including how to use the different mapping types offered by Designer, please see the chapter Content Mapping.

Processing Size

There are two options:

Output size - The resolution of the screen the effect is mapped to (not the mapping itself)

Input size - The resolution of the effect as set in Notch Builder.

Dry-Wet Blend

Global Intensity level for the effect on a scale of 0-1.
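If Dry-Wet Blend behaves like a conventional linear dry/wet mix (an assumption; this page does not describe how the blend is computed), each output value is an interpolation between the unprocessed input and the full effect, as in this Python sketch with a hypothetical function name:

```python
def dry_wet_mix(dry: float, wet: float, amount: float) -> float:
    """Linear dry/wet mix of one channel value.

    amount = 0 gives the unprocessed (dry) value, amount = 1 the full effect (wet).
    Out-of-range amounts are clamped to 0-1.
    """
    amount = min(max(amount, 0.0), 1.0)
    return dry * (1.0 - amount) + wet * amount


print(dry_wet_mix(0.2, 0.8, 0.25))
```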

Framerate Fraction

The Framerate Fraction setting indicates the fraction of the project refresh rate at which this layer renders. For example, if the project refresh rate is 50Hz and this setting is 1/2, the layer is rendered at 25Hz, every other frame.

The options are 1, 1/2, and 1/3.
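The arithmetic in the example above can be sketched in Python as follows. The render-rate calculation follows directly from the text; which frames the layer actually renders on (the phase) is an assumption here, and the function names are hypothetical.

```python
def layer_render_rate(project_hz: float, denominator: int) -> float:
    """Effective render rate for a Framerate Fraction of 1/denominator."""
    if denominator not in (1, 2, 3):
        raise ValueError("Framerate Fraction must be 1, 1/2 or 1/3")
    return project_hz / denominator


def renders_on_frame(frame_index: int, denominator: int) -> bool:
    """Hypothetical phase: assumes the layer renders on frames 0, n, 2n, ..."""
    return frame_index % denominator == 0


print(layer_render_rate(50, 2))                         # 25.0
print([f for f in range(6) if renders_on_frame(f, 2)])  # [0, 2, 4]
```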

Play Mode

This specifies the playback mode.

There are four modes; each one has a specific behaviour that is useful for a different situation.

-

Locked

When the play cursor stops, the block will also stop and the frame number will lock to the timeline position. When the cursor continues to play or holds at the end of a section, the block will also stop. Jumping around the timeline while playing will cause the block to jump to its corresponding point on its own internal timeline. Note that Locked behaviour does not apply to effect-type nodes in the Notch block.

-

FreeRun

If the play cursor continues to play or stops at the end of a section, the block will play continuously. Jumping around the timeline while the cursor is playing or has stopped does not affect which frame is being played.

-

Normal

When the play cursor stops, the block will also stop and the frame number will lock to the timeline position. When the cursor continues to play or holds at the end of a section, the block will play continuously. Jumping around the timeline while playing does not affect which frame is being played.

-

Paused

The block will pause on the frame at which the Paused playmode was selected. Moving the play cursor around the timeline in any way will not affect which frame is held. Leaving the layer and re-entering the layer will also not affect which frame is held.
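The four mode descriptions above can be summarised as a lookup table. The Python sketch below is only a transcription of the text for quick reference, with hypothetical names; FreeRun's behaviour while the cursor is stopped is inferred from the statement that jumping while the cursor has stopped does not affect which frame is played.

```python
from enum import Enum


class PlayMode(Enum):
    LOCKED = "Locked"
    FREERUN = "FreeRun"
    NORMAL = "Normal"
    PAUSED = "Paused"


class Situation(Enum):
    CURSOR_STOPPED = "play cursor stops"
    HELD_AT_SECTION_END = "cursor continues or holds at the end of a section"
    JUMP_WHILE_PLAYING = "cursor jumps around the timeline while playing"


# "advances": block keeps playing its own timeline
# "stops":    block stops (Locked/Normal lock the frame to the timeline position)
# "follows":  block jumps to the matching point on its internal timeline
# "ignores":  the frame being played is unaffected by the jump
# "holds":    block keeps the frame at which Paused was selected
BEHAVIOUR = {
    PlayMode.LOCKED: {Situation.CURSOR_STOPPED: "stops",
                      Situation.HELD_AT_SECTION_END: "stops",
                      Situation.JUMP_WHILE_PLAYING: "follows"},
    PlayMode.FREERUN: {Situation.CURSOR_STOPPED: "advances",
                       Situation.HELD_AT_SECTION_END: "advances",
                       Situation.JUMP_WHILE_PLAYING: "ignores"},
    PlayMode.NORMAL: {Situation.CURSOR_STOPPED: "stops",
                      Situation.HELD_AT_SECTION_END: "advances",
                      Situation.JUMP_WHILE_PLAYING: "ignores"},
    PlayMode.PAUSED: {s: "holds" for s in Situation},
}


def block_behaviour(mode: PlayMode, situation: Situation) -> str:
    """Look up how the block reacts to a timeline situation in a given mode."""
    return BEHAVIOUR[mode][situation]


print(block_behaviour(PlayMode.NORMAL, Situation.HELD_AT_SECTION_END))  # advances
```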

Padding X, Padding Y

This specifies the number of pixels added in the X and Y directions for rendering, which are cut off before compositing. This is useful for removing any screen-space edge artifacts. The padding is added symmetrically to both sides.
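As a worked example of the padding arithmetic, the Python sketch below assumes the Padding X and Padding Y values are added to each side (the text only says the padding is symmetrical, so halve the values if Designer instead splits them across both sides). Function names are hypothetical.

```python
def padded_render_size(width: int, height: int, pad_x: int, pad_y: int) -> tuple[int, int]:
    """Size actually rendered when pad_x/pad_y pixels are added to each side (assumption)."""
    return width + 2 * pad_x, height + 2 * pad_y


def crop_box(width: int, height: int, pad_x: int, pad_y: int) -> tuple[int, int, int, int]:
    """(left, top, right, bottom) of the region kept for compositing, in padded-render pixels."""
    return pad_x, pad_y, pad_x + width, pad_y + height


print(padded_render_size(1920, 1080, 16, 8))  # (1952, 1096)
print(crop_box(1920, 1080, 16, 8))            # (16, 8, 1936, 1088)
```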

Notch Colour Management

This section outlines colour management tools and conventions in Notch.

Before Notch v1.0

Before Notch v1.0, all blocks expect input and output in the sRGB Gamma 2.2 (x^2.2) colour space. This cannot be changed.

Notch v1.0+

Notch v1.0 introduces colour management options. You can now set the input/output format of a Notch block to one of the following settings before exporting it:

- sRGB Linear

- sRGB ‘Gamma 2.2’ (Note: this actually uses the standard sRGB curve, not a pure 2.2 gamma curve as the name might suggest)

- ACEScg
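To show why the note on the ‘Gamma 2.2’ option matters, this Python sketch compares the standard sRGB decoding curve (IEC 61966-2-1) with a pure 2.2 power curve. The two are close in the mid-tones but diverge noticeably near black. Function names are hypothetical; the formulas are the published sRGB ones.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding (IEC 61966-2-1) for an encoded value in 0-1."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Standard sRGB encoding, the inverse of srgb_to_linear."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055


def gamma22_to_linear(c: float) -> float:
    """Pure power-law decoding, x^2.2."""
    return c ** 2.2


for encoded in (0.02, 0.2, 0.5, 0.8):
    print(f"{encoded:.2f}: sRGB {srgb_to_linear(encoded):.5f}  pure 2.2 {gamma22_to_linear(encoded):.5f}")
```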

When Designer is set to the Disabled or Gamma Colour modes, Designer passes all textures in as sRGB Gamma 2.2. When Designer is in the ACES or OCIO Colour modes, it automatically detects the input and output transforms that the Notch block expects and converts between Notch’s and Designer’s working spaces when passing textures in and out of the block. This conversion is free when the Notch block is set to ACEScg, because Designer also uses an ACEScg working space.
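The decision described above can be summarised as a small Python function. It is a sketch of the documented behaviour, not Designer's implementation; the enum and function names are hypothetical, and it assumes the working space is ACEScg in both ACES and OCIO modes.

```python
from enum import Enum


class DesignerColourMode(Enum):
    DISABLED = "Disabled"
    GAMMA = "Gamma"
    ACES = "ACES"
    OCIO = "OCIO"


class NotchBlockFormat(Enum):
    SRGB_LINEAR = "sRGB Linear"
    SRGB_GAMMA22 = "sRGB 'Gamma 2.2'"
    ACESCG = "ACEScg"


def texture_conversion(mode: DesignerColourMode, block: NotchBlockFormat) -> str:
    """Describe how textures cross the Designer/Notch boundary (both directions).

    Assumes Designer's working space is ACEScg in both ACES and OCIO modes.
    """
    if mode in (DesignerColourMode.DISABLED, DesignerColourMode.GAMMA):
        # Per the text, Designer passes textures in as sRGB Gamma 2.2 in these modes.
        return "pass textures as sRGB Gamma 2.2"
    if block is NotchBlockFormat.ACESCG:
        # Block format matches the working space, so no transform is needed.
        return "no conversion needed"
    return f"convert ACEScg <-> {block.value}"


print(texture_conversion(DesignerColourMode.ACES, NotchBlockFormat.SRGB_LINEAR))
# convert ACEScg <-> sRGB Linear
```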

For more information about colour management in Designer see our colour transforms page.

For more information about colour management in Notch see Notch’s v1.0 manual here.